List of internships at Sorbonne Université IPSL-SCAI available here: https://drive.google.com/drive/folders/1KUWYC16xPfn7yNy8POK5rLh8umeSPBO_

Blog

AI4Climate Seminar: High-resolution canopy height and wood volume maps in France based on satellite remote sensing with a deep learning approach – Martin Schwartz, 27th of March 11:00 CET at Kayrros

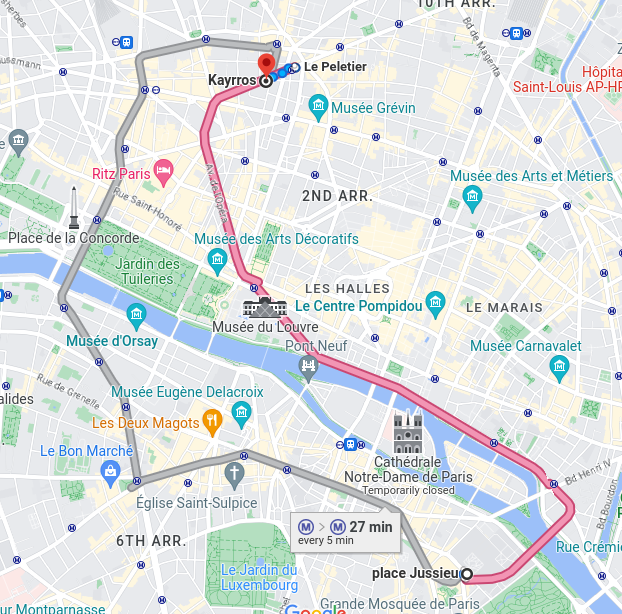

The seminar is on March the 27th, at 11:00 (CET) remotely and in person. The in-person meeting will be held in the Kayrros conference room, in Paris (map at the end of the post).

If you would like to attend online, the Zoom link is here: https://zoom.us/j/95758673139?pwd=blg3ZlhqVWw3Tk5EYUU0OEI3K3lvUT09

Short Abstract: In European forests that are divided into stands of small size and may show heterogeneity within stands, a high spatial resolution (10 – 20 meters) is arguably needed to capture the differences in canopy height. In this work, we developed a deep learning U-Net model based on multi-stream remote sensing measurements to create a high-resolution canopy height map of France. Our model uses multi-band images from Sentinel-1 and Sentinel-2 with composite time averages as input to predict tree height derived from GEDI waveforms. Thanks to forest inventory plots, we then turned this height map into a 10 m resolution wood volume map that we used to carry out wood volume estimations in different French regions. This work paves the way to monitoring biomass at high resolution and could bring key information for forest management policies.

Bio: Martin Schwartz is a young researcher who graduated from Ecole Polytechnique in 2020. During his gap year, he worked at Carbone 4, a leading consulting firm specializing in climate change and sustainability. Currently, Martin is in his 3rd year of PhD, supervised by Philippe Ciais and Catherine Ottlé at the Laboratory for Climate Science and Environment (LSCE). His research is focused on remote sensing and deep learning techniques applied to GEDI data, with the aim of advancing our understanding of forest canopy height and forest biomass at fine scale. His work has been done in collaboration with Kayrros which helped in the development of his research.

Kayrros: Kayrros is a French company that leverages advanced technologies like AI and satellite imagery to provide real-time insights and forecasting for the energy and commodity markets, with a focus on sustainability and environmental issues. They offer data-driven solutions for emissions, deforestation, and other environmental risks, helping businesses and governments make informed and sustainable decisions.

Location: Kayrros offices, Rue La Fayette, 75009 Paris. It is 18 minutes from Jussieu by metro.

AI4Climate seminar: Machine Learning for Climate Change and Environmental Sustainability – Claire Monteleoni – 6th of February 11:00 CET

The seminar is on February the 6th, at 11:00 (CET) remotely and in person. The in-person meeting will be held in the SCAI conference room (map at the end of the post).

If you would like to attend online, the Zoom link is here: https://zoom.us/j/98108885974

Abstract:

Despite the scientific consensus on climate change, drastic uncertainties remain. Crucial questions about regional climate trends, changes in extreme events, such as heat waves and mega-storms, and understanding how climate varied in the distant past, must be answered in order to improve predictions, assess impacts and vulnerability, and inform mitigation and sustainable adaptation strategies. Machine learning can help answer such questions and shed light on climate change. I will give an overview of our climate informatics research, focusing on challenges in learning from spatiotemporal data, along with semi- and unsupervised deep learning approaches to studying rare and extreme events, and precipitation and temperature downscaling.

Bio:

Claire Monteleoni is a Choose France Chair in AI and Directrice de Recherche at INRIA Paris, an Associate Professor in the Department of Computer Science at the University of Colorado Boulder, and the founding Editor in Chief of Environmental Data Science, a Cambridge University Press journal, launched in December 2020. She joined INRIA in 2023 and has previously held positions at University of Paris-Saclay, CNRS, George Washington University, and Columbia University. She completed her PhD and Masters in Computer Science at MIT and was a postdoc at UC San Diego. She holds a Bachelor’s in Earth and Planetary Sciences from Harvard. Her research on machine learning for the study of climate change helped launch the interdisciplinary field of Climate Informatics. She co-founded the International Conference on Climate Informatics, which turns 12 years old in 2023, and has attracted climate scientists and data scientists from over 20 countries and 30 U.S. states. She gave an invited tutorial: Climate Change: Challenges for Machine Learning, at NeurIPS 2014. She currently serves on the NSF Advisory Committee for Environmental Research and Education.

Variational data assimilation with deep prior – Arthur Filoche

Our next seminar will be held on Wednesday the 5th of October 2022, at 14h30 ECT (not on the 30th of September as previously advertised), at the Pierre et Marie Curie campus of Sorbonne Université, in seminar room 105 of LiP6, located on the first floor of corridor 25/26 (easier access through tower 26).

The seminar can also be followed remotely through zoom here:

https://cnrs.zoom.us/j/99096155743?pwd=ZmlNb3BYWGFRWE5lSnlBdjNRRDRGZz09

Password : n5jBHd

You can ask questions during and after the talk, in the slack channel.

Arthur Filoche’s talk is entitled:

« Variational data assimilation with deep prior »

Data assimilation remains the operational choice when it comes to forecasting and estimating Earth’s dynamical systems, and offers a large panel of methods to optimally combine a dynamical model and observations in order to predict, filter, or smooth the system state trajectory.

The classical variational assimilation cost function is derived by modelling the prior on errors as Gaussian distributions that are uncorrelated in time. The optimization then relies on error covariance matrices as hyperparameters. But such statistics can be hard to estimate, particularly for background and model errors. In this work, we propose to replace the Gaussian prior with a deep convolutional prior, circumventing the use of background error covariances.

To do so, we reshape the optimization so that the initial condition to be estimated is generated by a deep architecture. The neural network is optimized on a single observational window; no learning is involved, as in a classical variational inversion. The bias induced by the chosen architecture regularizes the proposed solution, with the convolution operators imposing locality. From a computational perspective, control parameters have simply been organized differently and are optimized using only the observational loss function, which corresponds to a maximum-likelihood estimation.

We propose several experiments highlighting the regularizing effect of the deep convolutional prior. First, we show that such a prior can replace background regularization in a strong-constraint 4DVar using a shallow-water model. We extend the idea to a 3DVar set-up, using a spatio-temporal convolutional architecture to interpolate sea surface satellite tracks, and obtain results on par with optimal interpolation with a fine-tuned background matrix. Finally, we give perspectives toward applying the same method in weak-constraint 4DVar, removing the need for model-error covariances while still enforcing correlation in space and time of model errors.
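As a rough sketch of the idea in this abstract, the classical strong-constraint 4DVar cost and its deep-prior counterpart might be written as follows. The notation is assumed here for illustration and is not taken from the talk: $x_0$ is the initial condition, $x_b$ the background state, $\mathbf{B}$ and $\mathbf{R}_t$ the background and observation error covariances, $\mathcal{M}_{0 \to t}$ the dynamical model, $\mathcal{H}_t$ the observation operator, $y_t$ the observations, and $g_\theta$ a convolutional network with weights $\theta$ applied to a fixed input $z$.

```latex
\begin{align}
% Classical strong-constraint 4DVar: background term + observation term
J_{\text{4DVar}}(x_0) &= \frac{1}{2} \lVert x_0 - x_b \rVert^2_{\mathbf{B}^{-1}}
  + \frac{1}{2} \sum_{t=0}^{T} \lVert \mathcal{H}_t(\mathcal{M}_{0 \to t}(x_0)) - y_t \rVert^2_{\mathbf{R}_t^{-1}} \\
% Deep prior: the initial condition is generated by a network, x_0 = g_\theta(z),
% and only the observation term is minimized, now over the weights \theta
J_{\text{deep}}(\theta) &= \frac{1}{2} \sum_{t=0}^{T}
  \lVert \mathcal{H}_t(\mathcal{M}_{0 \to t}(g_\theta(z))) - y_t \rVert^2_{\mathbf{R}_t^{-1}}
\end{align}
```

In the second form the background term, and with it $\mathbf{B}$, no longer appears; under these assumptions the regularization comes only from the architecture of $g_\theta$.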

Biographic notice:

Arthur Filoche is a Ph.D. student at the LiP6 of Sorbonne Université in France, under the supervision of Dominique Béréziat, Julien Brajard, and Anastase Charantonis. His research interests lie in combining deep learning and data assimilation.

Eddy Detecting Neural Networks: harnessing visible satellite imagery and altimetry for operational oceanography – Evangelos Moschos

Our next seminar will be held on Wednesday the 11th of May 2022, at 14h00 ECT, at the Pierre et Marie Curie campus of Sorbonne Université, in seminar room 105 of LiP6, located on the first floor of the corridor 25/26 (easier access through tower 26).

The seminar can also be followed remotely through zoom here: https://zoom.us/j/98859268451

You can ask questions during and after the talk in the slack channel: https://tinyurl.com/AI4CLIMATESLACK

Evangelos Moschos’ talk is entitled:

« Eddy Detecting Neural Networks: harnessing visible satellite imagery and altimetry for operational oceanography »

Mesoscale eddies are oceanic vortices with a typical radius of the order of 20–80 km. They live for days, months or even years, trapping and transporting heat, salt, pollutants, and various biogeochemical components from their regions of formation to remote areas. Eddies play a key role in oceanic circulation, modifying the surface currents as well as the mixed layer depth of the ocean. Thus, eddy detection and tracking is an emerging topic in operational oceanography, with advances over the last 10 years.

Eddies can be tracked on altimetry-derived Sea Surface Height (SSH) and geostrophic velocity currents through standard detection methods. However, the strong spatio-temporal interpolation of the altimetric observations increases the uncertainty of detection. Satellite imagery, in the visible and infrared spectrum, contains signatures of eddies which, despite their fine-scale resolution, are too complex to be processed by geometry-based methods.

Machine Learning/Computer Vision methods have proved very effective at exploiting complex remote sensing information, such as the eddy signatures on satellite imagery. We build a Convolutional Neural Network which can accurately detect the position, shape and form of mesoscale eddies in satellite Sea Surface Temperature (SST) images. Our CNN misses only 3% of coherent structures with a radius of more than 20 km and a clear signature on the Sea Surface Temperature, compared with a 34% miss rate for standard eddy detection methods on altimetric maps. Additionally, while standard altimetric detection has a 10% false positive rate (“ghost eddies”), the neural network detects less than 1% of ghosts.

By combining detections on data stemming from different sensors (here SSH and SST), we also provide a set of reference nowcast (real-time) detections with almost zero uncertainty. We focus here on an application to the validation of operational numerical oceanographic models in the Mediterranean Sea: comparing their outputs with the reference detections makes it possible to quantify their nowcast error and to choose between different numerical models in a given region.

Biographic notice:

Evangelos Moschos is a PhD student at the Laboratoire de Météorologie Dynamique, Ecole Polytechnique, France. After an engineering diploma from the Athens Polytechnic, he developed a keen interest in bridging computer vision methods with earth observation and operational oceanography. He is a co-founder of Amphitrite, a start-up harnessing AI to provide maritime stakeholders with real-time, reliable oceanic data.

Towards the combination of physical and data-driven forecasts for Earth system prediction – Eviatar Bach (ENS Paris)

The seminar is on January the 26th, at 14:00 (CET) remotely and in person. The in-person meeting will be held in the SCAI conference room (map at the end of the post).

If you would like to attend online, here is the Zoom link: https://us02web.zoom.us/j/84372436675?pwd=eWhwR2NXYllKUUpWOGU3OGEvUVR3Zz09

Eviatar Bach’s presentation is entitled:

«Towards the combination of physical and data-driven forecasts for Earth system prediction»

Abstract:

Due to the recent success of machine learning (ML) in many prediction problems, there is a high degree of interest in applying ML to Earth system prediction. However, because of the high dimensionality of the system, it is critical to use hybrid methods which combine data-driven models, physical models, and observations. I will present two such hybrid methods: Ensemble Oscillation Correction (EnOC) and multi-model data assimilation (MM-DA).

Oscillatory modes of the climate system are one of its most predictable features, especially at intraseasonal timescales. It has previously been shown that these oscillations can be predicted well with statistical methods, often with better skill than dynamical models. However, they only represent a portion of the signal, and a method for beneficially combining them with dynamical forecasts of the full system has not previously been developed. Ensemble Oscillation Correction (EnOC) is a method which corrects oscillatory modes in ensemble forecasts from dynamical models. We show results of EnOC applied to chaotic toy models with significant oscillatory components, as well as to forecasts of South Asian monsoon rainfall.

A more general method for combining multiple models and observations is multi-model data assimilation (MM-DA). MM-DA generalizes the variational or Bayesian formulation of the Kalman filter. However, previous implementations of this approach have not estimated the model error, and have therefore not been able to correctly weight the separate models and the observations. Here, we show how multiple models can be combined for both forecasting and DA by using an ensemble Kalman filter with adaptive model error estimation. This methodology is applied to multiscale chaotic models and results in significant error reductions compared to the best model and to an unweighted multi-model ensemble. Lastly, I will discuss the potential of this method for combining physical model forecasts, ML, and observations.
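To illustrate why the error estimates matter for weighting, here is a minimal scalar sketch of a precision-weighted (inverse-variance) combination of independent Gaussian estimates, which is the scalar form of the Bayesian update underlying the Kalman filter. It is not the MM-DA method from the talk: the function name `combine_estimates` and all numbers are hypothetical, and the error variances are assumed known rather than estimated adaptively.

```python
from typing import Sequence, Tuple


def combine_estimates(
    means: Sequence[float], variances: Sequence[float]
) -> Tuple[float, float]:
    """Combine independent Gaussian estimates of the same scalar quantity.

    Each estimate is weighted by its precision (1 / variance), so sources
    with a larger error variance contribute less to the combined value.
    Returns the combined mean and the combined variance.
    """
    if len(means) != len(variances) or len(means) == 0:
        raise ValueError("means and variances must be non-empty and of equal length")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be strictly positive")

    precisions = [1.0 / v for v in variances]
    total_precision = sum(precisions)
    combined_mean = sum(p * m for p, m in zip(precisions, means)) / total_precision
    return combined_mean, 1.0 / total_precision


# Hypothetical example: two model forecasts and one observation of the same variable.
# The first model is assumed to be less reliable (larger error variance).
values = [20.0, 24.0, 23.0]
error_variances = [4.0, 1.0, 1.0]

weighted_mean, weighted_variance = combine_estimates(values, error_variances)
unweighted_mean = sum(values) / len(values)

print("precision-weighted mean:", weighted_mean)
print("combined variance:", weighted_variance)
print("unweighted mean:", unweighted_mean)
```

If the assumed variances are wrong, the weights are wrong as well, which is the motivation the abstract gives for estimating the model error.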

Bio

Eviatar Bach is a Make Our Planet Great Again (MOPGA) postdoctoral fellow in Michael Ghil’s group at the École Normale Supérieure in Paris. Previously, he obtained his PhD at the University of Maryland, College Park with Eugenia Kalnay and Safa Mote. He is currently working on improving geophysical forecasts with data assimilation and data-driven prediction methods, and is interested in understanding the nonlinear dynamics and predictability of the climate system.

What is the cost? Calculating the environmental impact of scientific computing – Anne-Laure Ligozat

The seminar, given by Anne-Laure Ligozat, is scheduled for Friday the 3rd of December 2021 at 10:00 CET and will be held both remotely and in person, in English, with slides in English. The in-person meeting will be held in the SCAI conference room (map at the end of the post).

Link to the zoom session: https://zoom.us/j/96346324217

Anne-Laure Ligozat’s presentation is entitled:

«Calculer quoi qu’il en coûte ? Coût environnemental du calcul scientifique »

(«What is the cost? Calculating the environmental impact of scientific computing.»)

Abstract:

In this seminar, I will present the various environmental impacts of digital technology, as an introduction to those of scientific computing. These impacts are considered both at the level of computer programs, covering the existing tools for computing their carbon footprint and their limitations, and at the level of a research laboratory.

Short bio:

Anne-Laure Ligozat is an assistant professor in computer science at ENSIIE and at the LISN lab in Saclay. Her research interest is the environmental impact of digital technology.

New ways for dynamical prediction of extreme heat waves: rare event simulations and machine learning with deep neural networks. – Freddy Bouchet (ENS Lyon)

The seminar is on October the 19th, at 14:00 (CEST), both in person and remotely.

Place of the seminar: « Campus Pierre & Marie Curie » of Sorbonne University. It will take place in the SCAI seminar room, building « Esclangon », 1st floor.

If you would like to attend online, here is the Zoom link: https://us02web.zoom.us/j/82605468661

Freddy Bouchet’s presentation is entitled:

«New ways for dynamical prediction of extreme heat waves: rare event simulations and machine learning with deep neural networks.»

Abstract:

In the climate system, extreme events or transitions between climate attractors are of primary importance for understanding the impact of climate change. Recent extreme heat waves with huge impacts are striking examples. However, it is very hard to study those events with conventional approaches because of the lack of statistics: they are too rare in historical data, and realistic models are too complex to be run long enough.

We address this lack of data using rare event simulations. Using some of the best climate models, we oversample extremely rare events and obtain several hundred more events than with usual climate runs, at a fixed numerical cost. Coupled with deep neural networks, this approach drastically improves the prediction of extreme heat waves.

This sheds new light on the fluid mechanics processes which lead to extreme heat waves. We will describe quasi-stationary patterns of turbulent Rossby waves that lead to global teleconnection patterns in connection with heat waves and analyze their dynamics. We stress the relevance of these patterns for recently observed extreme heat waves and the prediction potential of our approach.

Climate Modeling in the Age of Machine Learning – Laure Zanna (NYU)

The seminar is on June the 23rd, at 15:00 and will be held remotely, in English.

Link to the zoom session: https://us02web.zoom.us/j/85178591120

Laure Zanna’s presentation is entitled:

«Climate Modeling in the Age of Machine Learning »

Abstract:

Numerical simulations used for weather and climate predictions solve approximations of the governing laws of fluid motions on a grid. Ultimately, uncertainties in climate predictions originate from the poor or lacking representation of processes, such as ocean turbulence and clouds that are not resolved on the grid of global climate models. The representation of these unresolved processes has been a bottleneck in improving climate simulations and projections. The explosion of climate data and the power of machine learning algorithms are suddenly offering new opportunities: can we deepen our understanding of these unresolved processes and simultaneously improve their representation in climate models to reduce climate projections uncertainty? In this talk, I will discuss the current state of climate modeling and its future, focusing on the advantages and challenges of using machine learning for climate projections. I will present some of our recent work in which we leverage tools from machine learning and deep learning to learn representations of unresolved ocean processes and improve climate simulations. Our work suggests that machine learning could open the door to discovering new physics from data and enhance climate predictions.

Short bio:

Laure Zanna is a Professor in Mathematics & Atmosphere/Ocean Science at the Courant Institute, New York University. Her research focuses on the role of ocean dynamics in climate change. Prior to NYU, she was a faculty member at the University of Oxford until 2019 and obtained her PhD in 2009 in Climate Dynamics from Harvard University. She was the recipient of the 2020 Nicholas P. Fofonoff Award from the American Meteorological Society “For exceptional creativity in the development and application of new concepts in ocean and climate dynamics”. She is the lead principal investigator of M²LInES, an international effort supported by Schmidt Futures to improve climate models with scientific machine learning.

Working group 4: Marie Dechelle – Bridging Dynamical Models and Deep Networks to Solve Forward and Inverse Problems

21 June at 10:00

Jussieu campus, SCAI meeting room,

Esclangon building, 1st floor

Zoom: https://zoom.us/j/98265750503

Partially observed dynamical systems embrace a wide class of phenomena and represent an overwhelming majority of Earth science modeling, traditionally relying on ordinary or partial differential equations. Recent trends consider Machine Learning as an alternative or complementary approach to traditional physical models, allowing the integration of observations and potentially faster computations through model reduction. In this regard, recent works study the learning of the decomposition between model-based (MB) and data-driven (ML) dynamical representations. However, learning such a decomposition with supervision on the trajectories alone is ill-posed.

We introduce a learning algorithm to bridge model-based prediction and data-based algorithms while resolving the ill-posedness. It relies on a cost function based on the computation of an upper bound of the prediction error, which enables us to minimize the contribution of the data-driven algorithm while recovering the physical parameters of the MB part. We demonstrate the soundness of our approach on a physical dataset based on simplified Navier-Stokes equations. We also present preliminary results on outputs of the ocean model NATL60.

The internal workshop « SCAI & AI4Climate » brings together researchers, engineers, PhD students and postdocs interested in topics related to the design and use of new Artificial Intelligence methods for studying the environment, from models to observations. The first meetings will be devoted to the work of PhD students. The talk will be followed by a discussion with the participants on the approach and the possible perspectives of the work.