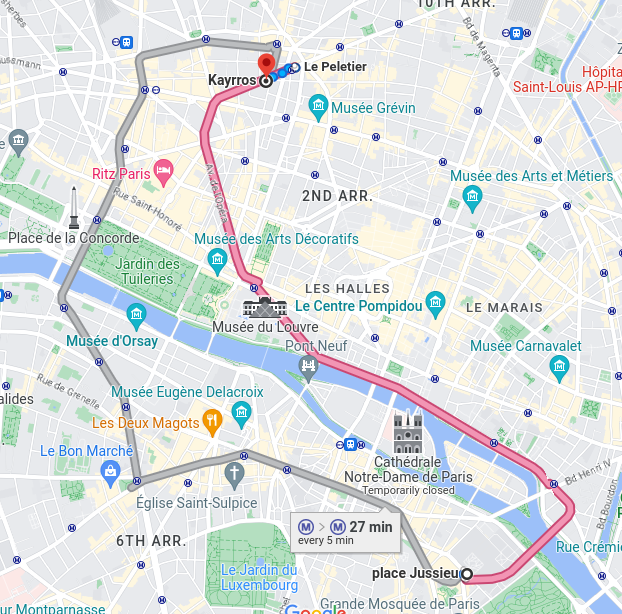

The seminar will take place on March 27th at 11:00 (CET), both remotely and in person. The in-person meeting will be held in the Kayrros conference room in Paris (map at the end of the post).

If you would like to attend online, the Zoom link is: https://zoom.us/j/95758673139?pwd=blg3ZlhqVWw3Tk5EYUU0OEI3K3lvUT09

Short Abstract: European forests are divided into small stands that may themselves be heterogeneous, so a high spatial resolution (10 to 20 meters) is arguably needed to capture differences in canopy height. In this work, we developed a deep learning U-Net model based on multi-stream remote sensing measurements to create a high-resolution canopy height map of France. Our model takes time-averaged composites of multi-band Sentinel-1 and Sentinel-2 images as input and predicts tree height derived from GEDI waveforms. Using forest inventory plots, we then converted this height map into a 10 m resolution wood volume map, which we used to estimate wood volume in different French regions. This work paves the way for high-resolution biomass monitoring and could provide key information for forest management policies.

Bio: Martin Schwartz is a young researcher who graduated from Ecole Polytechnique in 2020. During his gap year, he worked at Carbone 4, a leading consulting firm specializing in climate change and sustainability. Martin is currently in the third year of his PhD, supervised by Philippe Ciais and Catherine Ottlé at the Laboratory for Climate Science and Environment (LSCE). His research focuses on remote sensing and deep learning techniques applied to GEDI data, with the aim of advancing our understanding of forest canopy height and forest biomass at fine scale. His work has been carried out in collaboration with Kayrros, which helped in the development of his research.

Kayrros: Kayrros is a French company that leverages advanced technologies like AI and satellite imagery to provide real-time insights and forecasting for the energy and commodity markets, with a focus on sustainability and environmental issues. They offer data-driven solutions for emissions, deforestation, and other environmental risks, helping businesses and governments make informed and sustainable decisions.

Location: Kayrros offices, Rue La Fayette, 75009 Paris. It is 18 minutes from Jussieu by metro.